When to Talk, When to Click: A PM's Guide to AI Interfaces

Making Smart Choices in the Age of AI-Powered Interfaces

2024 marked a watershed moment in artificial intelligence, as large language models (LLMs) transformed how we interact with software. Yet while generative AI systems demonstrated unprecedented abilities to understand natural language, generate human-like text, create stunning images, and even produce videos, they struggled to access the private, organization-specific data that drives real business value.

As companies race to bridge this gap, Anthropic's Model Context Protocol (MCP) has emerged as a promising solution: it enables secure integration between AI models and enterprise systems.

As a product manager who has spent countless hours working in Jira, I was particularly intrigued by the possibility of using Claude, Anthropic's AI assistant, to streamline the often tedious process of Jira management.

What started as simple curiosity turned into hands-on testing of a Claude and Jira integration, which revealed both its potential and its limitations. Let me walk you through my experience.

High-Level Architecture and Setup

The integration follows a simple three-part architecture designed for both seamless communication and data security:

Claude's desktop application communicates with a local MCP server (the Jira client)

The Jira client connects directly to Jira's APIs

The MCP server runs on your machine, so Jira credentials and API calls stay local; Jira data reaches Claude only when a tool call returns it into the conversation

Claude Desktop 🔁 Jira Client 🔁 Jira

The Model Context Protocol is an open standard that enables developers to build secure, two-way connections between their data sources and AI-powered tools.
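Concretely, MCP messages use JSON-RPC 2.0. When Claude decides to query Jira, Claude Desktop sends the local server a `tools/call` request along the lines of the one built below. This is a minimal sketch: the tool name `search_issues` and its `jql` argument are hypothetical, since each Jira MCP server defines its own tools, which clients discover with a `tools/list` request.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tool invocation as a JSON-RPC 2.0 request."""
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 framing
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {
            "name": tool_name,       # hypothetical tool exposed by a Jira MCP server
            "arguments": arguments,  # must match that tool's declared input schema
        },
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool name and argument; real Jira MCP servers differ.
    print(make_tool_call(1, "search_issues", {"jql": 'project = "UMS" AND status = "Blocked"'}))
```

The server replies with a JSON-RPC response carrying the same `id`, and Claude reads the tool's result from it.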

The setup involved:

Claude Desktop

Visual Studio Code

Jira MCP server setup

MCP server configuration
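Claude Desktop reads its MCP servers from a `claude_desktop_config.json` file under an `mcpServers` key, where each entry gives the `command`, `args`, and `env` used to launch the server as a local process. The sketch below generates such an entry. It assumes a Node-based Jira MCP server, and the script path and the `JIRA_BASE_URL`, `JIRA_EMAIL`, and `JIRA_API_TOKEN` variable names are hypothetical; use whatever your chosen server documents.

```python
import json


def build_claude_desktop_config(server_path: str, base_url: str, email: str, token: str) -> dict:
    """Build the mcpServers entry Claude Desktop uses to launch a local MCP server."""
    return {
        "mcpServers": {
            # The key is a display name of your choice.
            "jira": {
                # Claude Desktop starts the server as a child process with this command.
                "command": "node",
                "args": [server_path],
                # Hypothetical variable names: use whatever your Jira MCP server expects.
                "env": {
                    "JIRA_BASE_URL": base_url,
                    "JIRA_EMAIL": email,
                    "JIRA_API_TOKEN": token,
                },
            }
        }
    }


if __name__ == "__main__":
    config = build_claude_desktop_config(
        "/path/to/jira-mcp-server/build/index.js",  # hypothetical path
        "https://your-company.atlassian.net",
        "you@example.com",
        "<api-token>",
    )
    # Merge this into claude_desktop_config.json, then restart Claude Desktop.
    print(json.dumps(config, indent=2))
```

Keeping the API token in `env` rather than in `args` means it is passed to the server process without appearing in its command line.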

The "Aha!" Moment

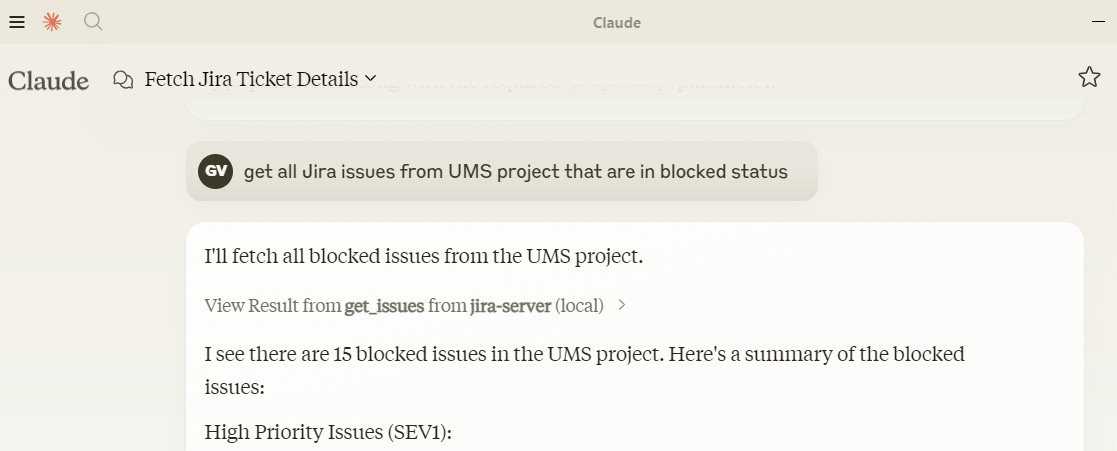

Once the setup was complete, I experienced the power of natural language queries against Jira. Instead of the usual clicking through filters and navigating complex interfaces, I simply typed: "get all Jira issues from UMS project that are in blocked status". Claude instantly translated this into the appropriate JQL (Jira Query Language) and retrieved all the blocked issues, making the process feel effortless.
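For reference, here is a JQL query equivalent to that request, plus how an MCP server might pass it to Jira's REST search API. This is a sketch under assumptions: the helper names are hypothetical, Claude writes its own JQL rather than using code like this, and the `/rest/api/2/search` path is an assumption, since search endpoints vary by Jira version and deployment (check your instance's REST API docs).

```python
from urllib.parse import urlencode


def quote_jql_value(value: str) -> str:
    """Wrap a value in double quotes, escaping embedded backslashes and quotes."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def blocked_issues_jql(project_key: str, status: str = "Blocked") -> str:
    """JQL for 'all issues in <project> that are in <status> status'."""
    return f"project = {quote_jql_value(project_key)} AND status = {quote_jql_value(status)}"


def search_url(base_url: str, jql: str, max_results: int = 50) -> str:
    """Build a Jira REST search URL (assumes the /rest/api/2/search endpoint)."""
    query = urlencode({"jql": jql, "maxResults": max_results})
    return f"{base_url.rstrip('/')}/rest/api/2/search?{query}"


if __name__ == "__main__":
    jql = blocked_issues_jql("UMS")
    print(jql)  # project = "UMS" AND status = "Blocked"
    print(search_url("https://your-company.atlassian.net", jql))
```

Quoting values matters because project keys and status names can contain spaces or reserved words that would otherwise break the query.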

Not only did I get a clear list grouped by priority, but I could then ask follow-up questions like "Which ticket is the oldest?" or "Who has the most blocked issues?" as naturally as asking another team member.
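Claude answers follow-ups like these by reasoning over the issues already returned into the conversation. To make the questions concrete, the sketch below performs the equivalent deterministic computations; it is an illustration, not how Claude works internally. The issue records are made-up, simplified examples (real Jira responses nest these fields under `fields` and return dates as strings).

```python
from collections import Counter, defaultdict
from datetime import date

# Made-up, simplified issue records for illustration only.
ISSUES = [
    {"key": "UMS-101", "priority": "High", "assignee": "John Doe", "created": date(2024, 3, 4)},
    {"key": "UMS-117", "priority": "Medium", "assignee": "Jane Roe", "created": date(2024, 5, 20)},
    {"key": "UMS-123", "priority": "High", "assignee": "John Doe", "created": date(2024, 6, 1)},
]


def group_by_priority(issues):
    """'List them grouped by priority' -> priority mapped to issue keys."""
    groups = defaultdict(list)
    for issue in issues:
        groups[issue["priority"]].append(issue["key"])
    return dict(groups)


def oldest_issue(issues):
    """'Which ticket is the oldest?' -> the issue with the earliest creation date."""
    return min(issues, key=lambda issue: issue["created"])


def top_assignee(issues):
    """'Who has the most blocked issues?' -> (assignee, count) with the highest count."""
    return Counter(issue["assignee"] for issue in issues).most_common(1)[0]


if __name__ == "__main__":
    print(group_by_priority(ISSUES))
    print(oldest_issue(ISSUES)["key"])
    print(top_assignee(ISSUES))
```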

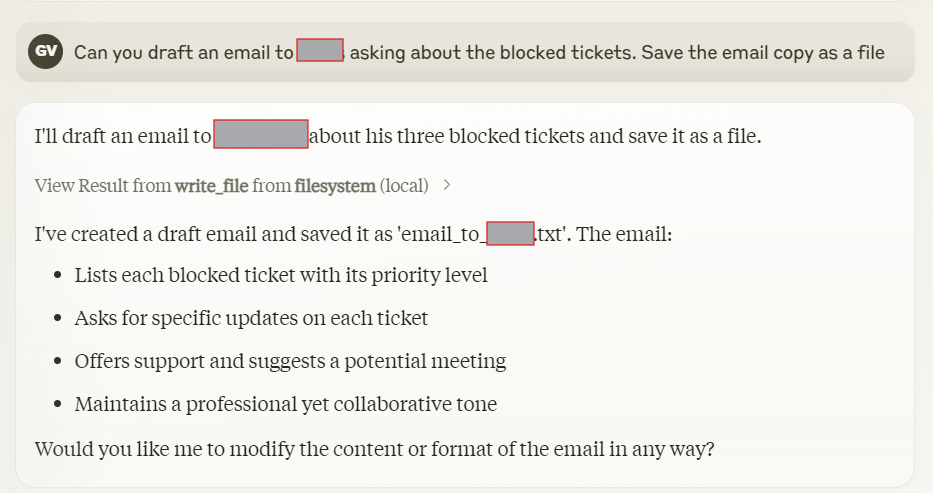

The real magic happened when I needed to draft an email to a team member about their blocked tickets. Instead of:

1. Export data to Excel

2. Filter relevant tickets

3. Copy-paste into email

4. Spend 15 minutes crafting the right tone

I just said, "Draft an email to John Doe asking about the blocked tickets" and got a perfectly structured, professional email that I could tweak and send.

Why This Matters for User Experience

Here's where it gets really interesting for product folks like us. The Model Context Protocol (MCP) isn't just about making our lives easier (though that's a fantastic bonus!). It's about fundamentally transforming how users interact with software:

Natural Language > Menu Driven

Remember teaching your parents how to find settings in an app? With Claude+MCP, they can just ask, "How do I change my notification settings?" No more "Click the gear icon... no, the other gear icon..."

Context-Aware Assistance

The system keeps track of context within a conversation. When I asked about blocked tickets and then wanted to email about them, it already had the details of those tickets from earlier in the chat. It's like having a super-smart assistant who's always paying attention.
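This "memory" is conversation context rather than long-term recall: chat clients typically resend the running message history, including earlier tool results, with each new turn, so the model still sees the tickets when you ask for the email. Below is a minimal sketch of that pattern; the `Conversation` class and the flat message shape are hypothetical simplifications (real histories store tool calls and results as structured blocks). It also shows the limit: anything outside the current chat, or beyond the model's context window, is not remembered.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Minimal chat history: every turn is kept and resent with the next request."""
    messages: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def next_request(self, user_text: str) -> list:
        """Append the new user turn and return the full history the model would see."""
        self.add("user", user_text)
        return list(self.messages)


if __name__ == "__main__":
    convo = Conversation()
    convo.add("user", "Get all Jira issues from UMS project that are in blocked status")
    # Hypothetical, simplified stand-in for the earlier tool result.
    convo.add("assistant", "Blocked: UMS-101 (John Doe), UMS-123 (John Doe)")
    request = convo.next_request("Draft an email to John Doe asking about the blocked tickets")
    # The earlier ticket list travels with the email request, so the model can use it.
    for message in request:
        print(message["role"], "->", message["content"])
```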

Complex Tasks Made Simple

Want to analyze trends across multiple tickets? No need to export data and create pivot tables. Just ask, "What patterns do you see in our blocked tickets?" and get instant insights.

As a PM, I'm excited about what this means for product development:

Reduced learning curves for new users

Less need to design UI for every complex user need, since UI can be generated dynamically based on the user's questions

Users can complete complex, multi-step tasks through natural conversation

Increased accessibility for users who struggle with traditional interfaces

But here's what really blows my mind: this is just the beginning. Imagine this technology integrated into every application we use. Your CRM, your analytics tools, your design software: all of them becoming conversational partners in your daily work.

The Road Ahead: Is The Future Conversational?

As we move forward, conversational AI will become an important way to interact with software, but will it completely replace menu-driven interfaces? Hold your horses before declaring their death! 🐎

Context is king when it comes to interface choice. Take driving, for instance: shouting 'Hey, play some jazz!' is far safer and more natural than fumbling with touchscreen menus at 65 mph. But flip the scenario to data analysis, and that chatty AI suddenly becomes the awkward choice. Nobody wants to say, 'Filter column B where values are greater than 100, then sort in descending order' when a few quick clicks would do the job.

Some use cases will be a natural fit for conversation. Conversational interfaces excel at complex queries and natural language interactions, while traditional menu-driven interfaces remain superior for tasks requiring precision, repeatability, and visual feedback.

The future lies in thoughtfully combining both approaches to create more versatile and user-friendly systems.

For us PMs, this means:

Prioritize context over trends. Don't rush to add AI chat interfaces just because they're hot right now. Instead, deeply understand your users' context: Are they mobile? Multitasking? Do they need precision? Let these insights drive interface decisions.

Think in hybrid experiences. The magic isn't in choosing between conversational and traditional interfaces; it's in weaving them together seamlessly.

Continuously measure and monitor the user experience. Since LLMs are non-deterministic by nature, implement graceful fallback mechanisms for cases when the AI cannot confidently determine user intent.
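One way to make such a fallback concrete: route confident requests to the AI, fall back to a familiar menu otherwise, and count how often that happens so the rate can be monitored. This is a sketch under loudly stated assumptions: the `IntentGuess` type, the 0.7 threshold, and the `filter_menu` screen are hypothetical, and LLMs don't natively produce calibrated confidence scores, so in practice the score might come from a separate classifier or a validation step.

```python
from dataclasses import dataclass

# Assumed threshold; tune it against real usage data.
CONFIDENCE_THRESHOLD = 0.7


@dataclass
class IntentGuess:
    """Hypothetical output of an intent-detection step."""
    intent: str
    confidence: float  # 0.0 to 1.0; assumed available and reasonably calibrated


@dataclass
class FallbackStats:
    """Counts fallbacks so the fallback rate can be monitored over time."""
    total: int = 0
    fallbacks: int = 0

    @property
    def fallback_rate(self) -> float:
        return self.fallbacks / self.total if self.total else 0.0


def route(guess: IntentGuess, stats: FallbackStats) -> str:
    """Act on confident guesses; otherwise hand the user a traditional UI."""
    stats.total += 1
    if guess.confidence >= CONFIDENCE_THRESHOLD:
        return f"run:{guess.intent}"
    stats.fallbacks += 1
    # Graceful fallback: show the familiar filter menu instead of guessing.
    return "show:filter_menu"


if __name__ == "__main__":
    stats = FallbackStats()
    print(route(IntentGuess("list_blocked_issues", 0.92), stats))
    print(route(IntentGuess("bulk_reassign", 0.41), stats))
    print(f"fallback rate: {stats.fallback_rate:.0%}")
```

A rising fallback rate is a useful signal that users are asking for things the conversational path doesn't handle well yet.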

What role do you see conversational interfaces playing in your application's future? How might they complement your existing user experience?

Resources: